Kubeflow for Machine Learning

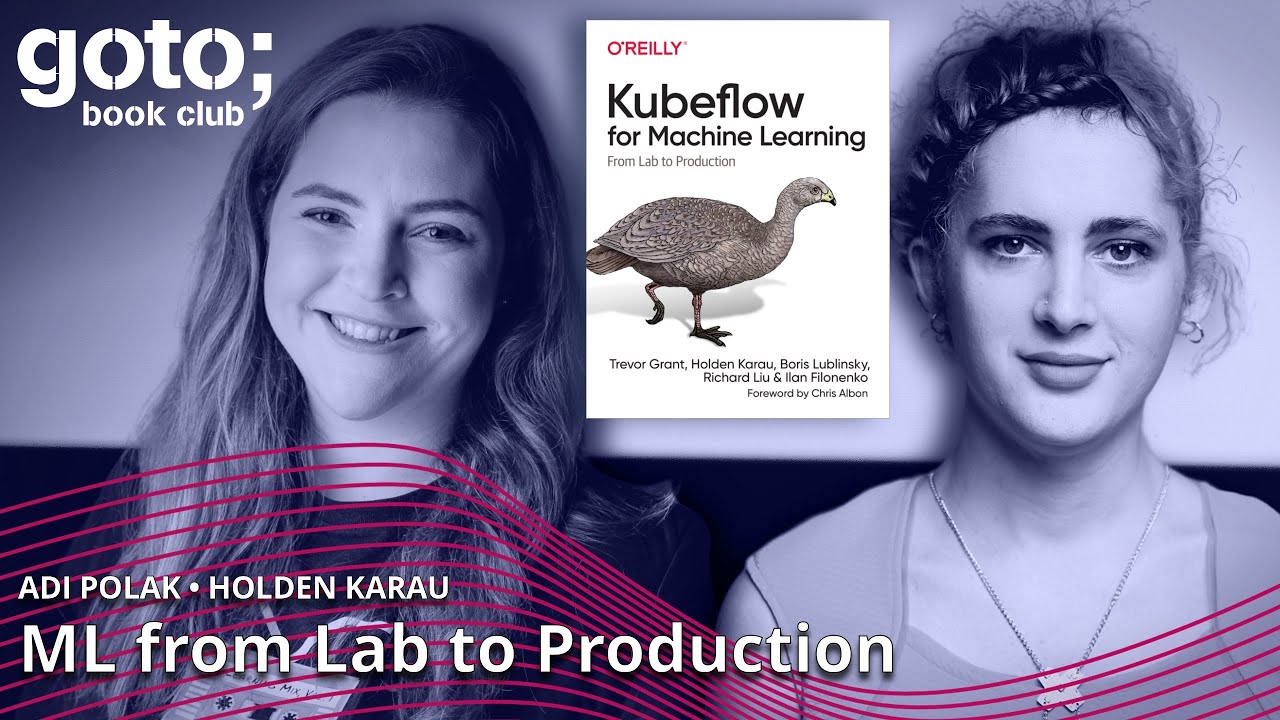

Machine Learning has been declared dead several times but that’s far from true. Join Adi Polak, vice president of developer experience at Treeverse, and Holden Karau, open source engineer at Netflix, in their conversation about Kubeflow and how it provides better tooling in the ML space. The discussion touches on Holden’s book “Kubeflow for Machine Learning” and expands to cover the worlds of Ray and Dask.

Transcript

Intro

Adi Polak: Hello, everybody, welcome to "GOTO Book Club." My name is Adi Polak. I'm the vice president of developer experience at Treeverse, which actually means I'm getting paid for working on open source. So this is great fun. And together with me, I have Holden Karau. Hi, Holden.

Holden Karau: Hi, there. I'm Holden Karau. This is Professor Timbit. He's going to be making sure nothing sneaks up on us while we're doing our important discussions. I work at Netflix. I also get paid to work on open source. I enjoy that. I'm very fortunate to have been paid to work on open source for the past seven years. I do feel very, very fortunate to have that life. And I guess we're talking about the Kubeflow book that I co-wrote with some really awesome folks.

Adi Polak: Yes, today's going to be packed with a lot of good stuff. Writing books, where should we start? Like, what inspired you to write the Kubeflow book?

Holden Karau: Well, that's a great question. I don't remember honestly because while I was writing the Kubeflow book, I actually also got hit by a car. So my memory is a little fuzzy because you take a bunch of opiates when you break both of your wrists. I don't remember what inspired me to do it. I think, honestly, probably part of it was I was dating someone who worked on Kubernetes and I was like, "Kubernetes is fun." Also, I was working at Google at the time. I like Kubernetes. I like machine learning. And I really wanted to see if we would get better tools for sort of integrating data preparation with the rest of the machine-learning space. I was hopeful that Kubeflow pipelines would be a really good way of doing that. One of the people that I work with from the Spark community had been working on Kubeflow serving over at Bloomberg as well. So that was kind of like, "Okay, cool. Let's write a book on this."

Adi Polak: That sounds like a lot of fun. One of the great perks I think of working on open source is you get to collaborate with a lot of people from different companies.

Holden Karau: Yes.

How does Kubeflow work?

Adi Polak: You get to learn about other people's infrastructure, tooling, what they care about, etc. So going back to Kubeflow, you mentioned it's a combination of the Kubernetes world and serving machine learning.

Holden Karau: Yes.

Adi Polak: How does it work?

Holden Karau: Oh, that's legit. So underneath the hood, it's changed several times, how it like actively functions. But in practice, I would say the core of Kubeflow, or at least the design principle of Kubeflow is to bring together the different tools that people would commonly use for machine learning and try and provide a partially unified interface for putting these different tools together so that you can use them in pipelines and you don't end up having to, like, have separate deployments to keep track of everything.

The idea is that everything gets deployed together. You can make a pipeline that's going to use the different components inside of Kubeflow. There's also metadata tracking. And there's also an experimentation framework. There are all of these other things that are then built on top of the fact that all of these tools live together inside of the same sort of pipeline experience. Underneath the hood, how it actually works has changed at least three times, probably more. But ideally, you shouldn't have to know that too much, right? The exact detail of how they're stringing everything together shouldn't matter too much. Early versions of Kubeflow pipelines did everything by passing volumes between the different pods, and that's a little not great. Then they realized, "Oh, yeah, this isn't great," and so they changed how the data flows around a little bit to make it a little bit more scalable. But, generally, you hopefully shouldn't have to notice.
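To make the idea of components living in one pipeline with metadata tracking a little more concrete, here is a minimal sketch in plain Python. It is not the Kubeflow Pipelines API: the `Pipeline` class and the `clean` and `scale` steps are hypothetical names invented for illustration, and the "metadata" is just a list of dictionaries.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class Pipeline:
    """Hypothetical toy pipeline; NOT the Kubeflow Pipelines API."""

    steps: List[Callable[[Any], Any]] = field(default_factory=list)
    metadata: List[Dict[str, Any]] = field(default_factory=list)

    def add(self, step):
        # Register a step and return self so calls can be chained.
        self.steps.append(step)
        return self

    def run(self, data):
        for step in self.steps:
            data = step(data)
            # Track which step ran and how many records it produced.
            self.metadata.append({"step": step.__name__, "records": len(data)})
        return data


def clean(rows):
    # Data preparation: drop records with missing values.
    return [r for r in rows if r is not None]


def scale(rows):
    # Feature preparation: scale values relative to the largest one.
    top = max(rows)
    return [r / top for r in rows]


pipeline = Pipeline().add(clean).add(scale)
result = pipeline.run([2, None, 4, 8])
```

The point of the sketch is only that the steps share one run and one metadata log, so there is nothing separate to deploy or keep track of per tool.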

Adi Polak: Interesting. It really takes me into training machine learning over a large set of data. So is that something that Kubeflow supports?

Holden Karau: Sort of, I would say. The answer is sort of. I have obtained the Professor. He is going to explain to us how Kubeflow sort of trains large machine learning. Kubeflow has a few different ways of doing data preparation and a few different ways of doing model training. If you go sort of like with the everything is a volume path, then you're naturally limited to what volumes are. But Kubeflow can also work with object stores, which is much, much better for scalability. And you can do your data preparation with Spark. You can do it with the other tools that I worked on, which I should remember because I worked on it for, like, a year. How do I not remember something that I worked on for a year? It's like some Java internally at Google, externally...Beam, Beam, yeah. So you can use Beam to do your data preparation. And then for the actual, like, model training, you can use all kinds of different things. They can do parallel model training, and it works pretty well.

There are some limitations, I would say. So some of the tooling inside of Kubeflow doesn't scale and other parts do. And it's not always super clear, I would say, which parts are scalable and which parts are not, which is an area with opportunity for improvement, we could say if we're writing an American-style performance review.

The structure of the Kubeflow book

Adi Polak: Interesting. So when designing the book and thinking about the table of contents, usages, etc., how did you refer to the different areas where Kubeflow scales well and where there's room for improvement?

Holden Karau: That's legit. So for the table of contents, we didn't really go that way. We sort of just went into the traditional like, "Hey, this is the first thing that you're going to do, right? You're going to do your data preparation and your data cleaning. And then after that, you're going to want to do some kind of model training and you're going to want to also use cross-validation tools." And so it was very much, like, this is probably...we tried to lay it out in sort of the journey of building something.

Of course, we started with a teaser chapter as it were, which used completely non-scalable tools. But you actually got something working quickly because otherwise, you're not going to read 200 pages of text to hopefully train a model. You want to have something with you early on. So chapter two, I think, was just like, "Hey, look, this works, we promise," and then the next, like, X chapters were very much, like, "Okay, cool. So now, let's talk about how you're actually going to do this." So in each chapter, we talked about the different tools that you could use inside of Kubeflow. And we talked about which ones were scalable and which ones weren't inside of each chapter. So you could decide, essentially, if you were working with small datasets, your life is a lot easier and you could go down essentially the faster path to happiness and success. And if you're working with large datasets, then you'll probably get better models out of it, but there's a reason why we get paid to do this stuff.

Adi Polak: I think that conclusion of there's a reason why we get paid to do these things, it's a very strong statement.

Holden Karau: I mean, it's fun. It's fun. But it's also, like, there are times when I just wanted to, like, poke my eyes out with a rusty spoon and go live on a farm somewhere. I mean, I have no skills, I would die immediately. Well, maybe like 72 hours. For example, some of the data preparation tools were just like, "Oh, God, what is going on here?" But that's okay. It's okay, right? Like, if it was too easy, we wouldn't need a book about it and I wouldn't have a job. It'd be very sad because I live in America and if I don't have a job, I don't have health insurance. I don't have health insurance, I'll die. So the fact that machine learning is hard keeps me alive.

Adi Polak: Yes, fabulous. Let's continue that path. It always strikes me, that there are people that study machine learning or data science, and then when they actually find a job and they start working in the field, they realize that you know, it's tough. It's more than having already made-up datasets from Kaggle, where it's all fun and nice. So I'm writing a book about Spark and machine learning.

Holden Karau: Yes.

Adi Polak: Yes, thank you so much.

Holden Karau: I'm so excited about your book. It's gonna be awesome. It's gonna be so cool.

Adi Polak: Thank you. I really hope so. There's a lot of good stuff in it. It kind of assumes people already know what machine learning is, so it doesn't spend much time on that, and then dives into distributed architecture, graphs, scheduling, and all this good stuff. Actually, one of the areas discusses the distributed TensorFlow approach and Keras. And the Keras API actually provides a data set out of the box. So one of the things I wrote there is that it provides a data set out of the box, which means you don't have to pre-process it, which is awesome for learning but doesn't really happen in real life.

Holden Karau: Yes, in the real world, yeah, no one comes to us. And it's like, "Here's some wonderful data. It's super clean. There are definitely no invalid values. All of the columns are labeled. I know what the schema is. It's great." No, yeah, everything's terrible. But that's okay, right? And I think one of the things that I think about when I think back to university is there's that thing where we learn maybe how things should be or how things could be, and then when we come to the real world, we discover that everything's terrible.

We can make it as things should be, but it's going to take a lot of work. But we'll get there, right? We can get to the idealized clean data set. It's just going to be several, several months of work. I don't know. I think that the space of distributed training is still something that is super open, right? We can use Kubeflow to do our scheduling. We can even just use Spark. We can use Dask. There are all kinds of different tools that we can use to do our data preparation and then do our model training.

There's no clear right way to do things yet. I think that's kind of neat, right? And I hope that we do what we did in the big data space where we all steal the good ideas from each other and then eventually, it doesn't really matter which tool you're using because they're all like close enough, right? It's not stealing when it's open-source. It's...I don't know what the fancy word is, but collaborate effectively, you know.

Adi Polak: Borrowing.

Holden Karau: Yeah, borrowing for keeps.

Adi Polak: Borrowing the concepts.

Holden Karau: Yes.

Adi Polak: Yes, 100%. Now we see the world of big data evolves. I think at some point there was a lot of news about the fact that big data has died.

Holden Karau: Okay, cool.

Adi Polak: Yeah, it fell to the people who make money out of working in the field. But I think it's just being transformed. A little bit of what I feel at the moment is that we're kind of living in a cycle. Machine learning was very big at some point and then we introduced more data tools to handle machine learning and we realized that "Hey, it's great for machine learning, it's also great for analytics," right? So we can build a lot of this Google Analytics stuff because for machine learning it's maybe not ready yet. Then we get another circle of machine learning and now...I believe machine learning and data love, I'd like to call it, but now we're actually in the phase where we're discussing data again, right, metadata, pre-processing, all this good stuff. And that brings me to the books that you're writing now, actually.

Scaling Python

Holden Karau: Of course. And my publisher would be very annoyed with me if I did not mention the books that I was writing right now. So one of them is "Scaling Python with Dask," the other one is "Scaling Python with Ray," very similar concepts. And the other one that I'm most excited about, and I don't have a publisher for it yet... So if you publish kids' books and think that this says children's author, you give me a call, more likely it'll end up being self-published, but that is "Distributed Computing 4 Kids." And that one is less machine learning, that's more just like, "Hey, what's up? I think that functional programming is super cool. I think that if we don't call it functional programming, we can teach children functional programming," and I think it'll be really awesome. And it tries to teach functional programming and distributed computing through Garden Gnomes and Spark. We'll see if it's a success or not but yeah.

There's like a whole bunch of different cool things happening, right? Like, so Ray has...I mean, both Ray and Dask use Apache Arrow pretty extensively. And Spark also has Apache Arrow. And TensorFlow, you can feed Apache Arrow stuff to it as well, right? So I'm really excited that it looks like we're actually finally agreeing on a data format for interchange that isn't CSV. So that's really cool, especially because we can pass these objects around in shared memory.

It's like super painful to do from Java because Java doesn't really like it when you're working with raw memory buffers. But we can finally do it. It's really exciting. The other thing that I'm really stoked about is that one of the things that I remember people were so excited about when Spark added their DataFrame API is they're like, "Oh, my God, I get distributed data frames." And I was just like, "Well, you get something that we call distributed data frames. But you really need to temper your expectations because I know what you're thinking of, and this is not that at all, right?" And then they're like, "Oh, I don't get distributed data frames. Do I?" And I'm like, "Yeah." The cake was a lie. Sorry, I'm old.

But the nice thing about Dask is we finally have distributed data frames. And because Dask has a really good distributed DataFrame API that's based on Pandas, Spark was like, "Oh, wow. Okay, we need to catch up." It's also adding a distributed DataFrame API based on Pandas. This is super great for machine learning because if you're working in one of these places, or you're going to school, right, you probably did a lot of your data wrangling and preparation with Pandas because it works fine on Kaggle-sized data sets.

But then you come to the real world and you're like, "Oh, crap. I have to use these other super janky tools." And I was like, "No, it's cool." We put a fine layer of paint on top and now you can pretend that the six cats underneath are actually just one panda, right? The metaphor is a little fuzzy, but it's exciting because I view it as reducing the amount that people have to know, the cognitive load essentially, that stands in the way of people accomplishing their tasks.

So I'm excited that we're making the tools look more familiar. Unfortunately, on the downside, people aren't really going to buy a book about...actually they might. Maybe people would buy a book about distributed Pandas. But I think that even while we're making the APIs look a lot more similar and function very similarly, the performance is different enough that unfortunately, the abstraction is a little bit leaky. There will be times when you realize that your panda is actually six cats and a hamster. And then you'll be like, "Oh, damn it." But that's okay because you won't always have to think of the six cats and hamster together. Sorry.

Adi Polak: Yes. No, it's a great analogy. It reminds me of the YouTube video of the herding cats. Do you know it? We tried to kind of schedule everyone to be in the same place and work together nicely.

Holden Karau: Oh, sorry.

Adi Polak: Yes.

Holden Karau: I had a very brief stint as a program manager. That was where I was like, I'm a professional cat herder and I hate it. So big shout out to program managers out there. Thank you for herding the cats. I cannot do it. I can only herd the computer cats because they're at least semi-deterministic. The human cats are just like 100 times worse.

API Comparison: Ray and Dask vs Apache Spark

Adi Polak: Yes. Well, they're doing a fantastic job. Everyone says program managing is definitely a tough task. Well, thinking back about the abstractions and kind of providing an interface on top of distributed systems and distributed computation in a way that is...you know, the experience is good, people understand it, and having previous experience with a similar API sometimes can help. And that actually brings me to Ray and Dask. How is their API and usage when you, for example, compare it to what exists in Spark today?

Holden Karau: Yes. So there are certainly some similarities, but there are also some really big differences. So Ray and Dask feel more similar to Spark for me than I think they will for most people because one of the things that Ray and Dask do is they expose lower-level APIs which exist inside of Spark but are not exposed externally, right? So there is this internal task API and we do various things around how we handle task retries and stuff like that. But Ray and Dask just also directly expose those APIs to users, right? And this has ups and downs, right?

On one hand, it means that when people go to use those APIs, there is this sort of additional, like, "What's going on here?" that you have to have this extra mental model around. On the other hand, one of the really cool things that I think we've seen that's been possible because of that is there is a much healthier library ecosystem because the library authors are able to take advantage of certain lower-level properties that they weren't able to when they were building on top of Spark. So that's pretty cool.

API-wise, so Ray has a rapidly evolving data API, I would say. But it's still pretty bad...sorry, it still has a lot of room for growth. For example, you could not use the Ray data API to do word count until, I think, this year, possibly late last year. And that's a pretty basic problem, right? And that's okay because fundamentally, Ray's model is more focused around enabling you to use other tools on top of it, I would say, right? Like, it's very focused on exposing fast conversions to Spark, to Dask, to other tools to make it so that you can take your data in and out of Ray essentially for almost free, and then you can have a really nice experience.

On the other hand, if you come to the data API inside of Ray itself from something like Spark, you're going to be sad. With Dask, I would say it's interesting. They have three different types of collections, right? Dask Bag is similar to Spark RDD. Dask DataFrame is similar to Spark DataFrame, but also more similar to Pandas DataFrame. And then Dask Array is sort of not similar to either of these things but similar to NumPy arrays, but distributed. I think the places where people are going to be like, "What's going on?" are partitioning, query pushdown, and metadata interfaces, where the optimizations just aren't there, right? And that's okay because they're generally a little bit lighter weight in terms of their implementation. This is a pretty common pattern. But there is no automatic filter pushdown in either of these systems, right? Like, it is up to you to push down your filters by hand. So there's that.
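As a rough illustration of what pushing a filter down by hand means, here is a small standard-library sketch. It does not use Dask or Ray; the `read_rows` helper, its `predicate` parameter, and the sample data are all hypothetical. The idea is simply that the filter is handed to the reader, so rows that don't match are never materialized, instead of loading everything and filtering afterwards.

```python
import csv
import io

# Hypothetical sample data standing in for a much larger file.
RAW = """user,country,amount
a,CA,10
b,US,25
c,CA,40
"""


def read_rows(source, predicate=None):
    """Yield parsed rows; if a predicate is given, apply it while reading."""
    for row in csv.DictReader(io.StringIO(source)):
        if predicate is None or predicate(row):
            yield row


# Filter applied late: every row is materialized first, then filtered.
all_rows = list(read_rows(RAW))
late = [r for r in all_rows if r["country"] == "CA"]

# Filter pushed down by hand: only matching rows are ever materialized.
early = list(read_rows(RAW, predicate=lambda r: r["country"] == "CA"))
```

Both paths give the same answer; the difference is how much data is held along the way, which is what an optimizer with automatic pushdown would otherwise decide for you.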

Adi Polak: Interesting. There's a way to go, but then, on the other side, they might have better support for Arrow. I think I've seen...

Holden Karau: Oh, yes.

Adi Polak: Yes.

Holden Karau: Yes, I would just say Dask and Ray both do better jobs of integrating tools outside of themselves, right? Dask and Ray both make it easier to work with other machine learning tools than you would necessarily get from inside of Spark, right? It's definitely a little bit more painful to do that inside of the Spark ecosystem.

Pluggable architecture

Adi Polak: It's a really interesting approach to open source. I know there is pluggable architecture, which is an architecture that enables that collaboration. And you probably know it better than I do, but I don't think I've seen it in Spark, right?

Holden Karau: No, Spark is not really happy about pluggability. Every time we try and make something near pluggable on Spark, it's a battle. I think part of that is from just the culture of the project. And yes, Dask and Ray are very much more modular and like, "Yes, whatever, you can swap your components around. That's fine. That's normal." I think they were forced to do that because they came onto the scene a little bit later and so they couldn't do everything all at once, right, they couldn't boil the ocean. So it was very much like, "Yeah, whatever. These are the parts we provide and you bring the rest."

Adi Polak: Interesting. From what I've seen, it gave way to a lot of other tools in the ecosystem as well to see how they can work together along with Spark and provide a better ecosystem for building data pipelines, for building machine learning pipelines, and everything that comes together. I think the farther we go, the more connectors and tooling we see that enable us to connect with things such as Spark.

I was writing a whole chapter about Petastorm specifically, which bridges from Spark to frameworks such as TensorFlow and PyTorch and enables the two to coexist. I think it's really interesting how they did it. Basically, Petastorm is a framework to read Parquet files, which doesn't exist out of the box in TensorFlow and PyTorch. And with Spark, we know that Parquet files are actually the default, or kind of also the best practice, for saving data. So we kind of omit the CSV option, we push it out of the way.

How to write three books in parallel?

Adi Polak: Going back to your books, I think this is exciting. So how do you organize your schedule to be able to write three books in parallel and also have a full-time job, and take care of Professor?

Holden Karau: Yes, Professor Timbit is very important. I mean, so there's a bunch of different things that I do. So "Distributed Computing 4 Kids," that's a much slower project. It's sort of a long-term project. Actually, the illustrator I'm working with is...she's from Ukraine, so it's on hold right now. Hugs and support to everyone dealing with the terrible shit coming out of Russia right now. I'm so sorry. Anyways, sorry for that detour. So definitely like there's prioritization, right? There's like, "I need to do my job because I like not, you know, getting evicted." It's nice having a place to live. So that takes priority. The Dask and Ray books, I try and set aside a little bit of time each week to work on them.

Part of how the Ray book progresses faster is that I have a co-author. He's really motivated to get it done quickly. So we have a Sync every Friday, and I definitely am just like, "Oh, God, I need to get something done for Friday. Okay." So Thursday night, I'm often like, "Okay, let's do some writing." For the Dask book, I don't have that same pressure. So the Ray book I would say, like, draft-wise we're up to, like, I want to say chapter seven. For the Dask book draft-wise, I'm up to chapter three. And that's just the reality of sort of how my brain works is that, like, next deadline for scheduling. So it's not very effective, but close enough, close enough. But I try and spend, like, maybe a day's worth of work on the books each week.

Sometimes I'm lucky enough and there are intersections with my job, and I can be like, "Oh, cool. We're gonna, figure out how to do this thing," and then I'm gonna write about it. And that happens not as often as I would like but sometimes, sometimes.

Adi Polak: Yes, writing a book is a fascinating journey. How many books did you write so far?

Holden Karau: I don't know. Not a lot. I should know. I'll plug it into the computer. Sorry, the Professor is very upset that there are people in the house who he does not know. So one, two, what's this one? Oh, okay, three, four. I'd say four. Yes, four books. And from that, we could say that the Kubeflow book is the second-worst book I've written.

Adi Polak: Second worst, why?

Holden Karau: Or alternatively, maybe third best would be maybe a happier phrasing. So I would say it's the second-worst, mostly because I think that we did not pick the right time to write it. That's just the reality of it. And so, the concepts are all still super solid. But the first two chapters, which is, in my opinion, like normally where you, like, either get someone or you lose someone, they're out of date. It's really unfortunate because the subsequent chapters are not bad. But the first two chapters, it's very much like, "Hey, here's how we get started with Kubeflow." But now how you get started with Kubeflow is very different.

So that's rough. The worst book/first book that I wrote was for Spark 0.7. I mean, most of those APIs are still there because we're not very good at deprecating APIs inside of Spark. But there are a lot of things that are a lot easier to do now in that book. Like, literally, everything in my first book that I've written now is already like, "Yeah, you couldn't do this. You probably couldn't but you could." That was back when deploying was like, run these shell scripts and SSH. Oh, God, I feel...sorry, memories, memories.

Adi Polak: It's all good. I think, actually, these books are the books that teach us the history and the internals and how things evolved so people can actually appreciate the tools that they have today, you know, people that are now coming in to Spark... I think the project is 10 years old, maybe a little bit more.

Holden Karau: Yes. It's old. I don't know how old though, but yeah.

Adi Polak: I think we're on 13 years if I'm not mistaken. But yes, they can really appreciate the path and the journey that open source went through in evolving, with people such as yourself contributing back and investing time into developing it to make it more friendly. I think it's crucial. It's also one of the risks we take with writing books, right, because it's going to change. This is just the way it is. That's all good. But it actually reminds me of the children's book that you're working on, where probably not a lot of things are going to change, right?

Functional programming for kids

Holden Karau: I hope not, right. With the children's book, right, sort of what I've tried to focus on is things that are a little bit more...sort of foundational, right? It's not talking about interesting optimizations or fun and weird things. If we want, I can share my screen quickly. I don't know. But we can see sort of, you know...there's some gnomes, a little bit of their adventure. Tea is an important part of distributed computing. I prefer coffee but garden gnomes prefer tea. We talk about like how they decide on a leader. You know, we talked about like writing things down on separate cards rather than a single piece of paper to talk about how we split things up.

We talk about like sort of how the work is split up between the different garden gnomes, similar to how we split up work between different computers. We talk about map side reductions without using the word map side reduction because like if you told me that when I was in university, I would be like, "What the hell are you talking about?" and wandered away. We talk about garden gnomes falling asleep, which is also like the computer crashes and similar sort of concepts. That's sort of the conceptual side.

Then, of course, we show how these concepts translate into PySpark. But we don't talk about filter pushdown or things like that because those are details that could change and also fundamentally, you don't really need to understand them. But, I mean, it's great if you do. But, I think we don't need to teach children how filter pushdown is evaluated. If they're really interested in that, I'm sure they can go out and learn.
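The map-side reduction idea mentioned above can be sketched with nothing but the standard library. This is an illustration under assumptions, not code from the book: the partitions stand in for the separate cards each gnome (or computer) holds, and `combine_locally` and `merge` are hypothetical helper names.

```python
from collections import Counter

# Each inner list is the work handed to one gnome (one partition).
partitions = [
    ["tea", "gnome", "tea"],
    ["gnome", "tea"],
    ["garden", "gnome"],
]


def combine_locally(words):
    # Map-side step: each worker counts its own partition first.
    return Counter(words)


def merge(partials):
    # Reduce step: only the small per-partition counts are merged.
    total = Counter()
    for partial in partials:
        total.update(partial)
    return total


partials = [combine_locally(p) for p in partitions]
word_counts = merge(partials)
```

Because each worker summarizes its own share before anything is combined, less data has to move between workers than if every individual word were sent to one place.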

Adi Polak: I think that's brilliant. It really simplifies some of the concepts. And the gnomes getting tired and going to sleep is a fantastic analogy for "My machine just crashed because it lacks...oh, there's an outage or something happens and now I need to..."

Holden Karau: EC2 spot spike.

Adi Polak: Yes, the love of EC2. Great, this is amazing. I'm definitely looking forward to it. So publishers, children's books publishers out there, please reach out to Holden. She has it almost done.

Holden Karau: And if you're interested, and you're not a children's book publisher, but either way... Oh, and I need to update the image because this image is a little scary, but you can go to distributedcomputing4kids.com and look at the first draft of the gnomes. This is the first draft. The gnomes look a little more chemically induced than desired. But you can give me your email address, and I will selectively contact you in accordance with local regulations, and definitely not send you unsolicited commercial messages. But I will let you know when "Distributed Computing 4 Kids" is available and we can go from there. And yes...sorry, yeah, I just have to push the book a little bit because otherwise who's going to read it? Professor Timbit isn't so good at reading it.

Pain points in data infrastructure

Adi Polak: Hundred percent. Right, let's talk about our last topic for today's conversation. I think it's a very interesting one because it speaks about data infrastructure and its impact and all the great books, Holden, you wrote and your rates and everything that's going to come next. Like, what are some of the biggest pain points that we have in data infrastructure today?

Holden Karau: Yes. So I think there's a whole bunch of different things. I think metadata is something that continues to evolve and we continue to see more need for evolution there, right? So Iceberg, Delta Lake, lakeFS, we're continuing to see a lot of really interesting development. I think tracking data over time, data lineage is definitely something to consider. There are a bunch of early tools. There's Marquez from the people who used to be at...what's that company, WeWork? And there are a lot of really interesting tools in the space. I think there's no clear solution here yet either. But I think it's pretty exciting.

I think we'll see some really fascinating things come there. I think otherwise, like data quality tools also, in general, there's some really interesting stuff coming. I think I signed an NDA about one of them so I can't talk about that one. But I'm really excited, there are some really cool soon-to-be open-source tools. Actually, let me see if it's public. Oh, yeah. Okay, cool, it is. They posted a YouTube video four days ago. So I can't say too much. SodaCL, from some of my friends, also looks pretty interesting. It's a DSL for writing sort of assertions about your data.

So you can have them evaluated when you go to save a new table, right? And it can be like, "Hey, what's up? We're not going to save these changes because all of the data is null, nope." And you may think I'm joking, but I have caused a serious incident at a company that is one of the largest in the world by... I don't know if I can swear on this, screwing that up before. So I'm excited to see more tooling around correctness and quality, in addition to more tooling around metadata tracking and data lineage tracking. Not causing production outages is one of my goals, one of my goals. Yeah, cool.
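The pattern of evaluating assertions before a table is saved can be sketched in plain Python. This is not SodaCL syntax or any real tool's API: `check_table`, `save_table`, the in-memory `store`, and the sample rows are hypothetical, chosen only to show a write being refused when the data fails its checks.

```python
def check_table(rows, required_columns):
    """Return a list of human-readable failures; empty means the table passes."""
    failures = []
    if not rows:
        failures.append("table is empty")
    for column in required_columns:
        missing = sum(1 for row in rows if row.get(column) is None)
        if missing:
            failures.append(f"{column}: {missing} missing value(s)")
    return failures


def save_table(rows, store, name, required_columns):
    # Refuse to publish the new table if any assertion fails.
    failures = check_table(rows, required_columns)
    if failures:
        raise ValueError("; ".join(failures))
    store[name] = rows


store = {}  # stands in for a real table catalog
good = [{"id": 1, "amount": 10}, {"id": 2, "amount": 25}]
bad = [{"id": 1, "amount": None}, {"id": 2, "amount": None}]

save_table(good, store, "orders", ["id", "amount"])
try:
    save_table(bad, store, "orders", ["id", "amount"])
    rejected = False
except ValueError:
    rejected = True
```

Here the second write is rejected and the previously saved good table stays in place, which is the behavior you want when the alternative is a production incident.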

Adi Polak: Yes.

Holden Karau: Is your book in an early release yet?

Adi Polak: Yes, it is. I'm actually going to finish the ninth chapter but there are three chapters out.

Holden Karau: Oh, yeah.

Adi Polak: Thank you. It's slowly progressing. Switching jobs and all that good stuff kind of postponed it a little bit. But it's slowly progressing. I'm actually giving myself deadlines.

Holden Karau: So people who have access to the O'Reilly learning platform can get the early release copies of your book there, yeah?

Adi Polak: Yes. Also, there's a GitHub repo, which holds all the coding samples, Jupyter Notebooks, and all the good stuff for people to try out. Yeah. Cool, wonderful. Well, I am super excited about your books. I am super excited for, I guess, the fact that you're creating more educational content for people to learn and kind of get a better understanding of what real-world problems look like. So it's always fantastic to have more information out there from experienced people such as yourself. I'm definitely looking forward to reading it. If you're looking for reviewers, everyone at home listening to us is always happy to take a look, provide some insights, and ask good questions.

Holden Karau: Yes.

Adi Polak: Yes, always. And also people at home, if you're interested to learn or to read a little bit more about machine learning with Spark, things that are not the traditional machine learning but actually go beyond and speak of the distributed architecture, etc., you're most welcome, more than happy to get your insights and questions as well.

Holden Karau: Where should people reach out to you if they want to be an early reviewer of your book?

Adi Polak: Yeah, so you're most welcome to reach out over Twitter. That's @Adipolak, A-D-I-P-O-L-A-K. My DMs are forever and ever open.

Holden Karau: Oh, brave.

Adi Polak: I know. This is a decision I made I think three years ago, and I'm sticking to it. So my DMs are forever and ever open. You're most welcome to come and ask questions. I'll share all the G Docs with you or PDFs, or whatever you prefer, and I'd love to get your insight and feedback. Holden, how about yourself, where can people see the early release of your books?

Holden Karau: Yes, similarly it's in a weird hybrid of Google Docs and GitHub. If you're interested, my Twitter DMs are often open, occasionally closed. You can also just e-mail me. It's just my first name dot my last name, so holden.karau@gmail.com. I am always happy to chat with people about the different projects that I'm working on. I would love more feedback. I think...big shout out to everyone who is an early reviewer or gives early feedback on a book. Without you, technical books would not be nearly as good as they are. Like, the reviewers for the Dask and Ray book, right, I was going through some of the comments last night, and without them, these books would just not end up nearly as good as they are. So thank you for taking the time, if you're one of those people. Even just like small fixes, like those are valuable. So, keep up the good work, y'all.

Adi Polak: All right, thank you so much for listening. Today with me we had Holden Karau and I'm Adi Polak, and we are hosting the "GOTO Book Club." And it was lovely, lovely to have you, Holden, together with me. So thank you, everyone. Yeah.

Holden Karau: It's really great catching up.

Adi Polak: Likewise, so many...

About the speakers

Open Source Engineer at Netflix